TD-SFC: A new model of speech motor control

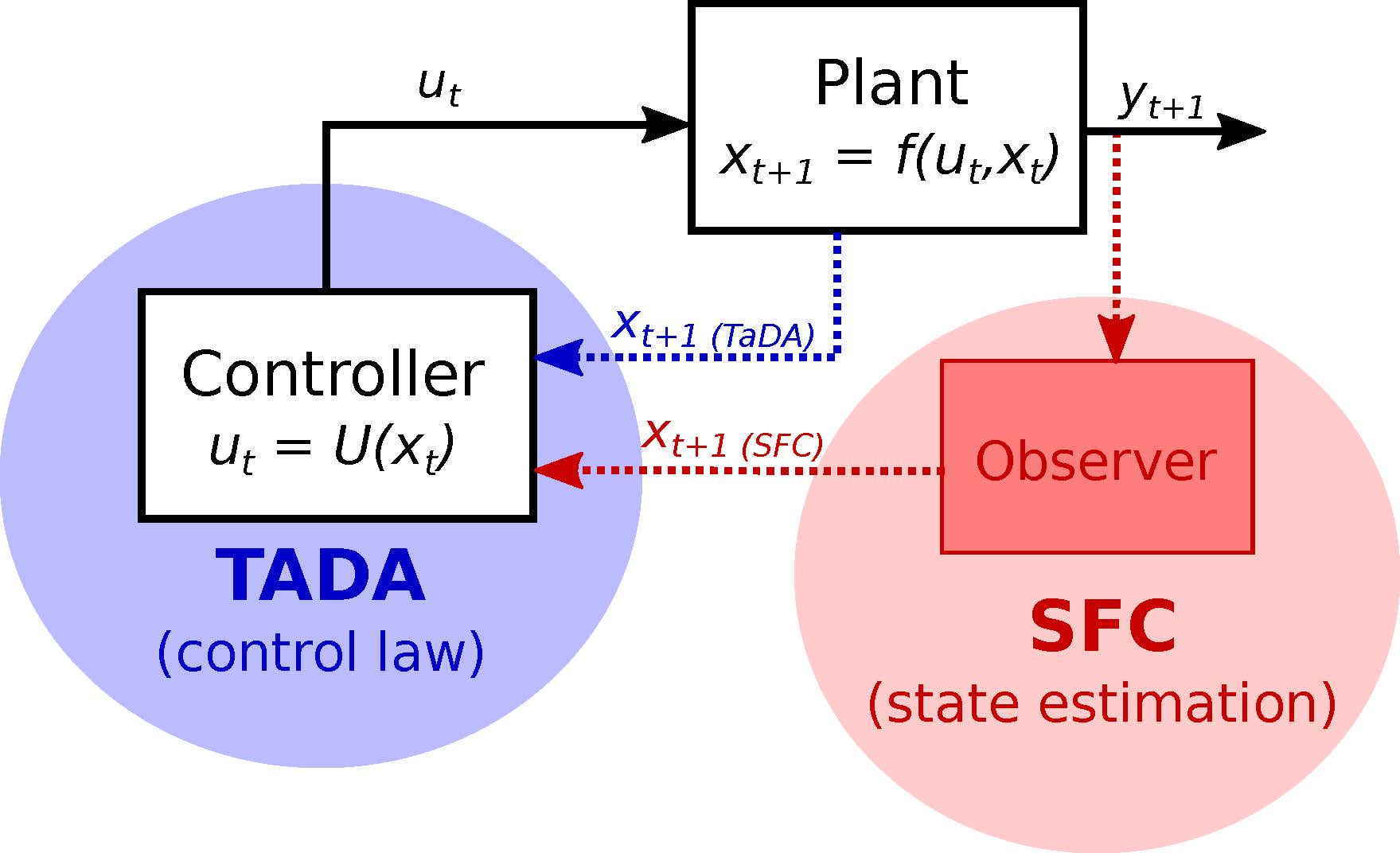

We recently proposed a new model of speech motor control (TD-SFC) that is based on articulatory goals and explicitly incorporates acoustic sensory feedback within a state-based control framework. The model combines two existing, complementary models of speech motor control: the Task Dynamics model and the State Feedback Control model of speech. We demonstrate its effectiveness by simulating a simple formant perturbation study, and show that it qualitatively reproduces the online compensation for unexpected perturbations reported in human subjects.
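To make the idea of online compensation concrete, here is a minimal sketch of a state-feedback loop that receives perturbed sensory feedback. It is a scalar toy and not the TD-SFC model itself: the one-dimensional plant, the function name `simulate`, the gains, and the perturbation size are all assumptions chosen for illustration.

```python
def simulate(perturbation, n_steps=200, onset=50, target=1.0,
             control_gain=0.3, observer_gain=0.2):
    """Toy scalar state-feedback loop with a sensory perturbation.

    All names and parameter values are illustrative assumptions.
    Returns the produced (true) state at every step.
    """
    x = 0.0       # true state, a stand-in for a formant-like quantity
    x_hat = 0.0   # the controller's internal estimate of that state
    produced = []
    for step in range(n_steps):
        # The control law acts on the estimate, not on the true state.
        u = control_gain * (target - x_hat)
        # The plant and the internal prediction advance with the same command.
        x = x + u
        x_pred = x_hat + u
        # Sensory feedback is shifted once the perturbation switches on.
        shift = perturbation if step >= onset else 0.0
        y = x + shift
        # Correct the prediction using the sensory prediction error.
        x_hat = x_pred + observer_gain * (y - x_pred)
        produced.append(x)
    return produced


baseline = simulate(perturbation=0.0)
shifted = simulate(perturbation=0.2)
print(round(baseline[-1], 3), round(shifted[-1], 3))
```

With an upward shift in the feedback, the estimate is pulled upward, so the controller lowers the produced value: the response opposes the perturbation. The toy is not tuned to match the magnitude or time course of compensation reported in human subjects.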

For more details, please refer to the following publication:

Vikram Ramanarayanan, Benjamin Parrell, Louis Goldstein, Srikantan Nagarajan and John Houde (2016). A new model of speech motor control based on task dynamics and state feedback, in proceedings of: Interspeech 2016, San Francisco, CA, Sept 2016 [pdf].

Articulatory movement primitives

Articulatory movement primitives may be defined as a dictionary or template set of articulatory movement patterns in space and time, weighted combinations of the elements of which can be used to represent the complete set of coordinated spatio-temporal movements of vocal tract articulators required for speech production.

We designed a computational approach to derive interpretable movement primitives from speech articulation data. We proposed a convolutive Nonnegative Matrix Factorization algorithm with sparseness constraints (cNMFsc) to decompose a given data matrix into a set of spatiotemporal basis sequences and an activation matrix, as seen in the figure. The algorithm optimizes a cost function that trades off the mismatch between the proposed model and the input data against the number of primitives that are active at any given instant. Our results suggest that the proposed algorithm extracts movement primitives from human speech production data that are linguistically interpretable. Such a framework might aid the understanding of longstanding issues in speech production such as motor control and coarticulation.

The figure shows an example of how scaled and shifted versions of different primitives can be used to reconstruct a sequence of real-time MRI data input to the algorithm (the activation matrix determines the scaling).
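The reconstruction step described above, summing scaled and time-shifted copies of each primitive, can be sketched in a few lines. This is only the forward (synthesis) side of a convolutive factorization, not the cNMFsc fitting procedure; the function name `reconstruct`, the nested-list data layout, and the toy numbers are assumptions made for illustration.

```python
def reconstruct(bases, activations):
    """Convolutive reconstruction from primitives and their activations.

    bases[k] is primitive k, stored as a list of T frames, each frame a
    list of M feature values. activations[k] holds the gain of primitive
    k at each of N data frames. Returns an M x N nested list.
    (The layout and names are illustrative assumptions.)
    """
    n_features = len(bases[0][0])
    n_frames = len(activations[0])
    recon = [[0.0] * n_frames for _ in range(n_features)]
    for basis, gains in zip(bases, activations):
        for onset, gain in enumerate(gains):
            if gain == 0.0:
                continue  # this primitive is inactive at this frame
            for t, frame in enumerate(basis):
                n = onset + t
                if n >= n_frames:
                    break  # the primitive runs past the end of the data
                for m, value in enumerate(frame):
                    recon[m][n] += gain * value
    return recon


# Two toy primitives over M=2 features and T=2 frames.
bases = [
    [[1.0, 0.0], [0.5, 0.0]],  # primitive 0: feature 0 starts high, then decays
    [[0.0, 1.0], [0.0, 1.0]],  # primitive 1: feature 1 held for two frames
]
# Sparse activations: each primitive fires once.
activations = [
    [2.0, 0.0, 0.0, 0.0],  # primitive 0 at frame 0, scaled by 2
    [0.0, 0.0, 1.0, 0.0],  # primitive 1 at frame 2, unscaled
]
recon = reconstruct(bases, activations)
print(recon)
```

Most activation entries here are zero, which is the kind of solution a sparseness constraint on the activation matrix is meant to favor: few primitives active at any given instant.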

For more details, please refer to the following publications:

Vikram Ramanarayanan, Louis Goldstein, and Shrikanth S. Narayanan (2013), Articulatory movement primitives – extraction, interpretation and validation, in: Journal of the Acoustical Society of America, 134:2 (1378-1394) [link].

Vikram Ramanarayanan, Athanasios Katsamanis and Shrikanth Narayanan (2011). Automatic data-driven learning of articulatory primitives from real-time MRI data using convolutive NMF with sparseness constraints, in proceedings of: Interspeech 2011, Florence, Italy, Aug 2011 [pdf].

Vikram Ramanarayanan, Maarten Van Segbroeck and Shrikanth Narayanan (2013). On the nature of data-driven primitive representations of speech articulation, in proceedings of: Interspeech 2013 Workshop on Speech Production in Automatic Speech Recognition (SPASR), Lyon, France, Aug 2013 [pdf].

Control primitives

We can also extend the computational approach and ideas presented above to a control systems framework. It is not yet clear, however, whether a dictionary of primitives can help illuminate speech motor control, particularly by identifying a low-dimensional subspace for such control. To explore this question, we present a data-driven approach to extract a spatio-temporal dictionary of control primitives (sequences of control parameters), which can then be used to control the vocal tract dynamical system to produce any desired sequence of movements.

First, we use the iterative Linear Quadratic Gaussian (iLQG) algorithm to derive a set of control inputs to a dynamical systems model of the vocal tract that produces a desired movement sequence. Second, we use the cNMFsc algorithm to find a small dictionary of control input "primitives" that can be used to drive said dynamical systems model of the vocal tract to produce the desired range of articulatory movement.
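As a rough illustration of the first step, the sketch below computes optimal control inputs for a linear system using the finite-horizon Linear Quadratic Regulator (LQR). This is a deliberately simpler stand-in for iLQG, which handles nonlinear dynamics by repeatedly linearizing around a trajectory and solving linear-quadratic subproblems of this kind. The scalar dynamics, cost weights, and function names (`lqr_gains`, `rollout`) are assumptions, not the vocal tract model used in the papers.

```python
def lqr_gains(a, b, q, r, q_final, horizon):
    """Backward Riccati recursion for the scalar system
    x[t+1] = a*x[t] + b*u[t] with cost
    sum(q*x[t]**2 + r*u[t]**2) + q_final*x[horizon]**2.

    Returns time-varying gains K[t] for the law u[t] = -K[t] * x[t].
    """
    p = q_final  # cost-to-go weight at the final step
    gains = [0.0] * horizon
    for t in reversed(range(horizon)):
        k = (a * b * p) / (r + b * b * p)
        gains[t] = k
        p = q + a * a * p - a * b * p * k
    return gains


def rollout(a, b, gains, x0):
    """Apply the feedback law and record the state and control sequences."""
    xs, us = [x0], []
    x = x0
    for k in gains:
        u = -k * x
        x = a * x + b * u
        us.append(u)
        xs.append(x)
    return xs, us


# Here x is the error between the current and desired articulator position.
gains = lqr_gains(a=1.0, b=1.0, q=1.0, r=1.0, q_final=10.0, horizon=50)
errors, controls = rollout(1.0, 1.0, gains, x0=-1.0)
print(round(errors[-1], 6), len(controls))
```

In the pipeline described above, control sequences like `controls`, computed for many desired movements, would form the data that the second (cNMFsc) step factorizes into a small dictionary of control primitives.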

We show, using both qualitative and quantitative evaluation on synthetic data produced by an articulatory synthesizer, that such a method can be used to derive a small number of control primitives that produce linguistically interpretable and ecologically valid movements. Such a primitives-based framework could help inform theories of speech motor control and coordination.

For more details, please refer to the following publications:

Vikram Ramanarayanan, Louis Goldstein and Shrikanth Narayanan (2014). Speech motor control primitives arising from a dynamical systems model of vocal tract articulation, in proceedings of: Interspeech 2014, Singapore, Sept 2014 [pdf].

Vikram Ramanarayanan, Louis Goldstein and Shrikanth Narayanan (2014). Speech motor control primitives arising from a dynamical systems model of vocal tract articulation, in proceedings of: International Seminar on Speech Production 2014, Cologne, Germany, May 2014 [pdf]. (Northern Digital Inc. Excellence Award for Best Paper)

Articulatory Setting and Mechanical Advantage

Articulatory setting (also called phonetic setting, organic basis of articulation, or voice quality setting) may be defined as the set of postural configurations (which can be language-specific and speaker-specific) that the vocal tract articulators tend to be deployed from and return to in the process of producing fluent and natural speech. We found that, on average, postures assumed at absolute rest (such as when the subject is not speaking) are significantly different from postures assumed during pauses in speech (such as those at a comma or a period).

Further, we found that postures assumed during pauses are significantly more mechanically advantageous than postures assumed during absolute rest. The postures assumed during various vowels and consonants lie along the continuum between these two.

For more details, please refer to the following publications:

Vikram Ramanarayanan, Louis Goldstein, Dani Byrd and Shrikanth S. Narayanan (2013), A real-time MRI investigation of articulatory setting across different speaking styles, in: Journal of the Acoustical Society of America, 134:1 (510-519) [link].

Vikram Ramanarayanan, Adam Lammert, Louis Goldstein, and Shrikanth S. Narayanan (2014), Are Articulatory Settings Mechanically Advantageous for Speech Motor Control?, in: PLoS ONE, 9(8): e104168. doi:10.1371/journal.pone.0104168. [link].

Vikram Ramanarayanan, Adam Lammert, Louis Goldstein and Shrikanth Narayanan (2013). Articulatory settings facilitate mechanically advantageous motor control of vocal tract articulators, in proceedings of: Interspeech 2013, Lyon, France, Aug 2013 [pdf].

Vikram Ramanarayanan, Dani Byrd, Louis Goldstein and Shrikanth Narayanan (2011). An MRI study of articulatory settings of L1 and L2 speakers of American English, in: International Seminar on Speech Production 2011, Montreal, Canada, June 2011 [pdf].